Era of

Hardware Assisted

Native Hypervisors

( Virtual Machines )

By DIPAK K. SINGH

dipak123@gmail.com /

dipak123@yahoo.com /

dipak.singh@nectechnologies.in

Version 1.0 dated 11 June 2017.



Focus of Presentation

More and more software solutions are being installed on either virtual machines or the cloud. The core of both approaches is almost always hardware assisted virtualization technology.

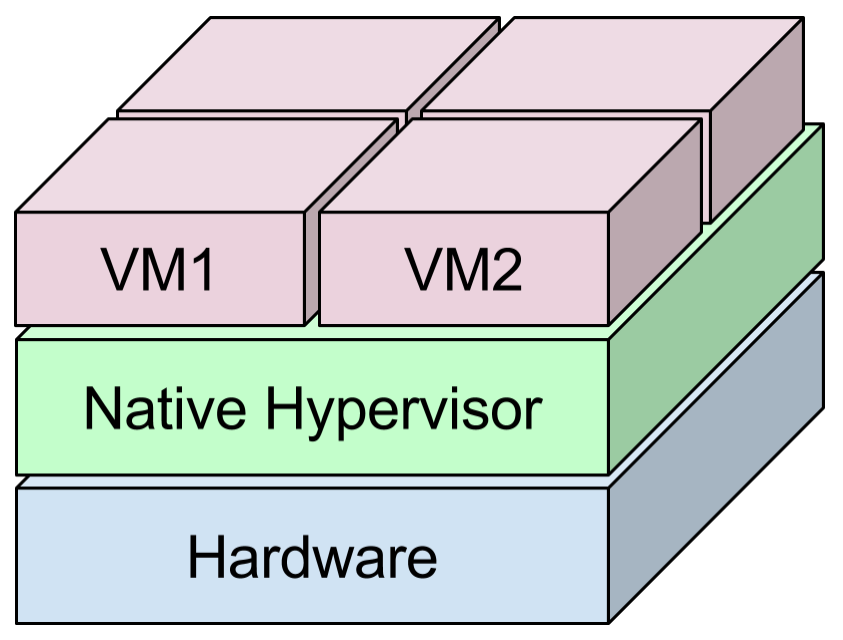

The focus of this presentation is native hypervisors running on the current generation of x86_64 desktop and server hardware.

A native hypervisor is like an OS which runs directly on raw hardware and is used to create virtual machines.

Current x86_64 hardware almost always contains a CPU with Intel VT-x or AMD-V along with nested page support. These technologies provide hardware assistance for virtualization.

Presentation covers

- What is hardware assisted virtualization and what is the reason for its popularity?

- What are the variants of hypervisors? How do they perform compared to each other and to raw hardware?

- What does the native hypervisor market look like?

This presentation is primarily for people with a technical background in computers. However, any computer user who is familiar with virtual machines should be able to gain from it.

Contents

A. Hypervisors

B. I/O Operations

C. Native Hypervisors in Market

D. Appendix: Recommended Reading

E. Appendix: Additional Topics

Section A

Hypervisors

Hypervisors

- What are Virtual Machine & Hypervisor?

- Two types of Hypervisors and its usage

- Advantages of Hypervisor

- Start of a new Era

What are Virtual Machine & Hypervisor?

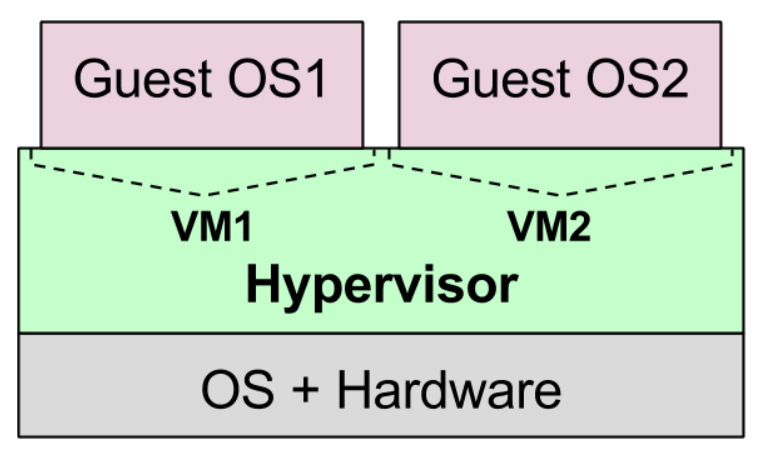

Virtual Machine (VM): A virtual environment in which an Operating System (OS) is run.

The OS running in a VM is known as the Guest OS. This virtual environment appears as real hardware to the Guest OS. A hypervisor is a piece of software which is used to create and run virtual machines. A hypervisor is also known as a virtual machine monitor (VMM). One or multiple VMs can be created in a hypervisor.

A hypervisor can run on a conventional OS (like Linux, Windows etc.) or directly over raw hardware.

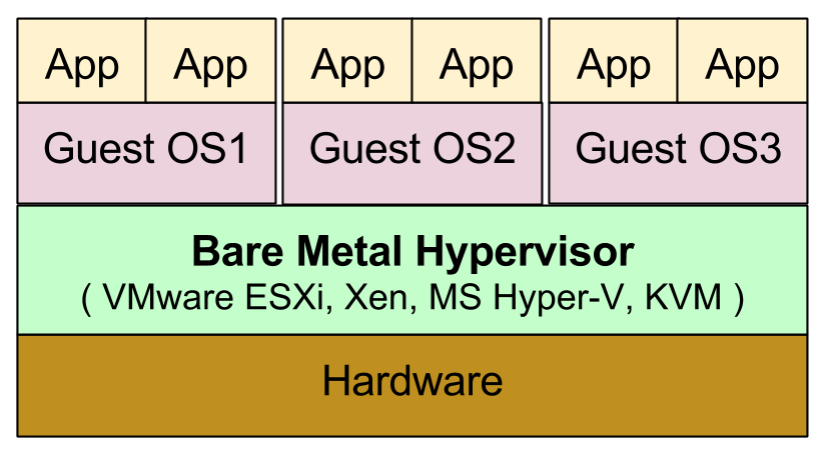

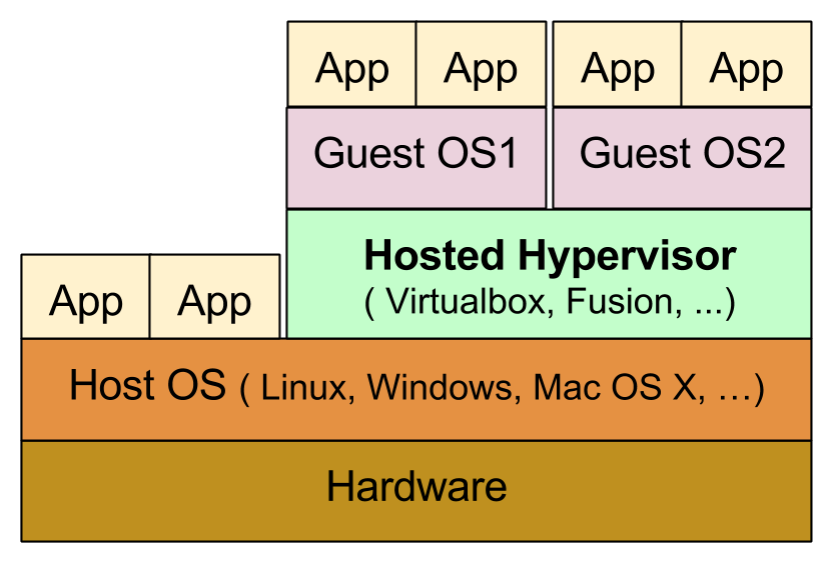

Two types of Hypervisors

Native hypervisor: runs directly over bare metal (raw hardware). Examples are VMware vSphere, Microsoft Hyper-V, Xen, KVM etc.

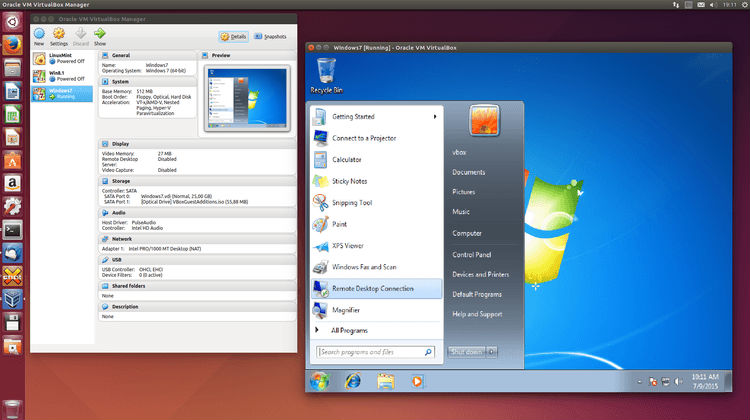

Hosted hypervisor: runs over a conventional operating system like Linux, Windows, Mac OS etc. Examples are VirtualBox, available on Windows, Linux and Mac OS X, and Parallels Desktop for Mac OS X.

Note: Even though hosted hypervisors are not the focus of this presentation, most of the concepts discussed are applicable to them too.

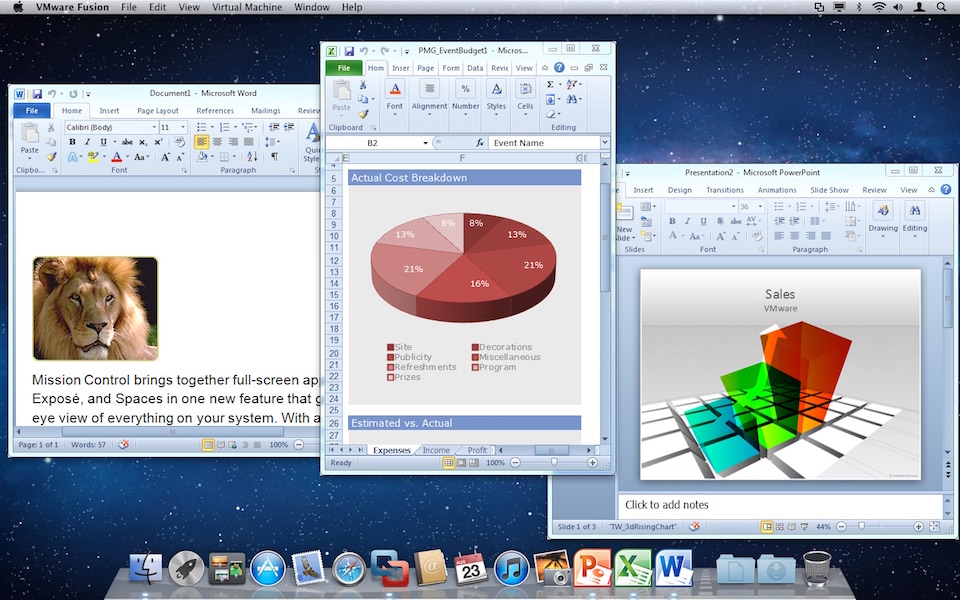

Use of Hosted Hypervisors

Applications of Host OS & Guest OS coexist on same screen so switching between them is convenient.

Windows on Linux

Windows on Mac OS X

Windows on Mac in Unity Mode

A few use cases are:

- To run applications of another OS. For example, running MS Word on Linux using a VM.

- To develop an application and then validate it immediately and conveniently on different OSes installed as VMs on the same host OS, with no need for a separate machine.

Use of Native Hypervisors

The Host OS layer in a hosted hypervisor contributes to performance loss. Native hypervisors are better in this regard.

Additionally, currently available native hypervisors provide other advantages which data centers and enterprise users consider useful. For example:

Better resource isolation of a VM from other VMs and from the hypervisor on the same machine.

Remote management of VMs by the administrator using easy to use software.

Because of the above advantages and a couple of other reasons mentioned in the next slide, the server market is rapidly being restructured so that computing resources are provided to the user in the form of virtual machines created using native hypervisors.

Advantages of Native Hypervisor over Hardware

Native hypervisors provide two main advantages over using raw hardware directly. They are:

Ease of provisioning

For example, it takes a couple of minutes for the administrator to provide a virtual machine. If the hardware develops a problem, the virtual machines running on it can be moved to another hypervisor much more easily and quickly using management software.

Efficient use of hardware

For example, suppose three users each need a machine, but at most one of the machines is in use at any time. Then it makes sense to create three virtual machines on a single machine. That is a huge saving in hardware cost.

The other major change is that cloud computing and native hypervisors are helping each other. Hypervisors are almost always the core of cloud computing, with a few exceptions like the use of containers. For example, an Amazon Elastic Compute Cloud (EC2) instance is a virtual machine created using a Xen based native hypervisor.

Start of a New Era

Virtual machines have been in successful use for a very long time. What has changed recently is good support from hardware, which makes virtual machines very efficient. In fact, hardware assisted native hypervisors add an overhead of just a few percent to CPU and memory access.

Unfortunately, the same cannot be said for I/O, where the overhead depends on many factors including the type of load.

For example:

If a database user reports 35% degradation after moving to a virtual machine, I would be curious to know the usage scenario but not shocked by the number.

On the other hand, if an application that finds a large prime number degrades by 5%, I would be very surprised, because prime number calculation is a CPU intensive activity.

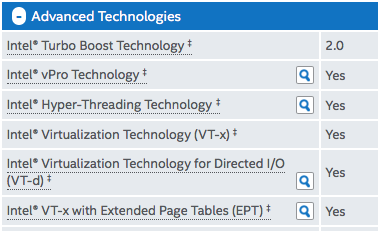

Start of a New Era - Hardware Assisted Virtualization

Around 2006, the virtual machine aware CPU technologies Intel VT-x and AMD-V were launched. They made hypervisor implementation much simpler, because virtualization no longer depended entirely on software. Further improvements ensured negligible overhead in CPU and memory accesses from Guest OSes.

Two such technologies, 'New Ring -1' and 'Nested memory', are described in the appendix section.

These technologies are de facto standard in currently available x86_64 CPUs. Check the CPU specification to be sure. They are known as

Intel VT-x with Extended Page Table (EPT) and

AMD-V with Rapid Virtualization Indexing (RVI)
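One way to check a given machine, sketched here under the assumption of a Linux system: the kernel lists CPU features in /proc/cpuinfo, where `vmx` stands for Intel VT-x, `svm` for AMD-V, `ept` for Intel EPT and `npt` for AMD nested page tables (RVI). The function name and the returned labels are illustrative, not part of any tool. Newer kernels may list some features on a separate `vmx flags` line, so the sketch reads both lines.

```python
from pathlib import Path

# Flag names as they appear in /proc/cpuinfo on Linux.
CPU_VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}
NESTED_PAGE_FLAGS = {"ept": "Intel EPT", "npt": "AMD RVI (nested page tables)"}


def virtualization_support(cpuinfo_text):
    """Return the virtualization features named in a /proc/cpuinfo dump."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Assumption: features are listed on the "flags" or "vmx flags" line.
        if key.strip() in ("flags", "vmx flags"):
            flags.update(value.split())
    found = []
    for table in (CPU_VIRT_FLAGS, NESTED_PAGE_FLAGS):
        found.extend(name for flag, name in table.items() if flag in flags)
    return found


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(virtualization_support(path.read_text()) or "no virtualization flags found")
```

An empty result does not prove the CPU lacks the feature: the flags can be hidden when virtualization is disabled in firmware or when the check is run inside a VM.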

Section B

I/O Operations

I/O Operations

- Emulated Devices

- Paravirtual Devices

- Hardware Assisted Devices

- Exclusively and directly connected Devices

- Virtual Machine aware Devices

I/O Operations by Guest OS

An OS interacts with the outside world through various types of devices like network cards, hard disks, USB devices etc. Many variants of each device type are available from multiple manufacturers.

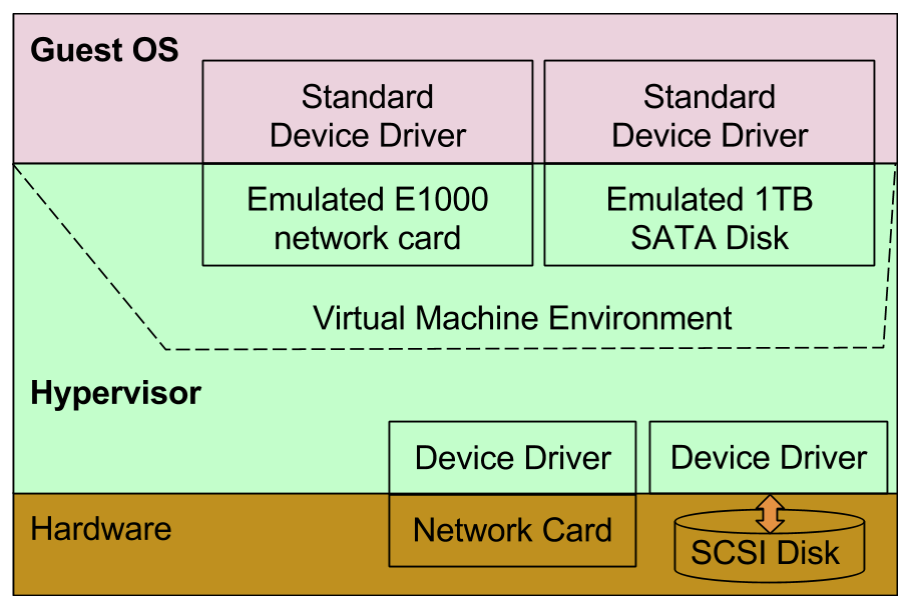

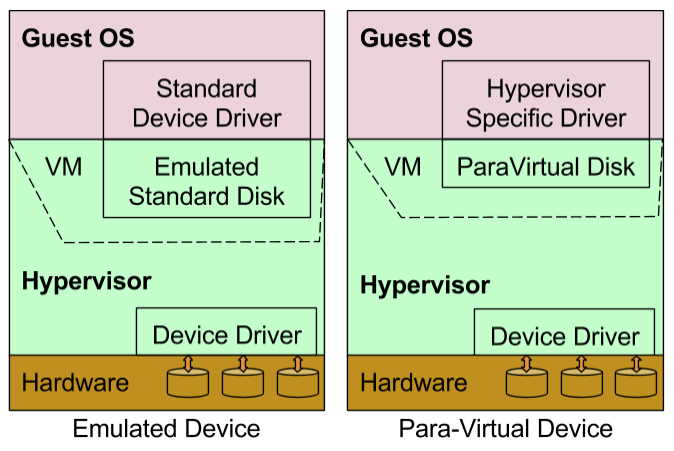

One of the simplest and easiest ways to support I/O in a Guest OS is for the hypervisor to emulate standard real world devices for the Guest OS.

In the example shown on the left, a standard real world E1000 network card is emulated by the hypervisor. The Guest OS runs as if it were running on real hardware containing an E1000 network card. The Guest OS is expected to have device drivers for standard devices like the E1000 network card. The Guest OS is completely unaware of the actual network card attached to the hardware on which the hypervisor is running.

Device emulation is a pure software solution and is confined within the hypervisor. It does not need any support from the hardware or the Guest OS.

Device Emulation vs Paravirtual Devices

Device emulators are always available in a hypervisor and are a good solution. Their sole disadvantage is that they are slow when heavy I/O is involved.

A good alternative is paravirtual devices. They are purely virtual devices which are created in the hypervisor so that the Guest OS can communicate with the hypervisor very efficiently.

This essentially cuts out the emulation layer, leading to good performance. This approach has become very popular compared to device emulation. Hypervisor specific device drivers must be added to the Guest OS to enable communication with paravirtual devices.

Note: On Windows, paravirtual devices are known as synthetic devices and emulated devices as legacy devices.

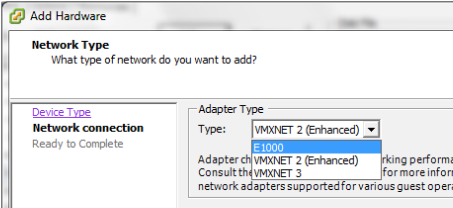

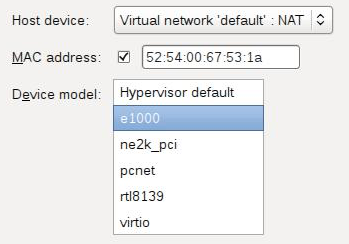
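The difference between the two approaches can be illustrated with a toy model. This is only a sketch: the class names are hypothetical, the figure of three register writes per packet is an assumption chosen for illustration, and real devices and drivers are far more involved. The emulated card pays one guest-to-hypervisor transition for every device register access, while the paravirtual card places a whole batch of packets in shared memory and notifies the hypervisor once.

```python
class EmulatedNic:
    """Toy model: every device-register access traps into the hypervisor."""

    # Assumed for illustration: buffer address, length and a "send" register.
    REGISTER_WRITES_PER_PACKET = 3

    def __init__(self):
        self.exits = 0   # number of guest-to-hypervisor transitions
        self.sent = []

    def send(self, packets):
        for packet in packets:
            self.exits += self.REGISTER_WRITES_PER_PACKET
            self.sent.append(packet)


class ParavirtualNic:
    """Toy model: the guest fills a shared queue, then notifies once."""

    def __init__(self):
        self.exits = 0
        self.queue = []  # stands in for a ring buffer in shared memory
        self.sent = []

    def send(self, packets):
        self.queue.extend(packets)    # plain memory writes, no trap
        self.exits += 1               # single notification for the batch
        self.sent.extend(self.queue)  # hypervisor drains the queue
        self.queue.clear()


if __name__ == "__main__":
    batch = [b"pkt%d" % i for i in range(4)]
    emulated, paravirtual = EmulatedNic(), ParavirtualNic()
    emulated.send(batch)
    paravirtual.send(batch)
    print(emulated.exits, paravirtual.exits)
```

In this model the number of transitions grows with the number of packets for the emulated card but stays constant per batch for the paravirtual card, which is the intuition behind the performance difference.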

Example of Network Device

The images below show the step of specifying the network device during virtual machine creation. 'E1000' is the name of a real world standard 1Gb network card. This card is emulated.

'VMXNET*' in VMware and 'virtio' in KVM are paravirtual network cards.

Device drivers for E1000 are almost always preloaded in any OS. Hypervisor specific device drivers are also generally preloaded in popular Guest OSes.

Device Drivers for Paravirtual Devices

Device drivers for hypervisors now come preloaded in many OSes. Therefore, using paravirtual devices has become easy.

For example:

OSS Linux nowadays comes preloaded with the paravirtual device drivers of the OSS hypervisor KVM.

Microsoft Windows nowadays comes preloaded with the paravirtual device drivers of the Microsoft hypervisor Hyper-V.

Red Hat Enterprise Linux (RHEL) 6.3 is preloaded with the paravirtual device drivers of VMware vSphere as well as Microsoft Hyper-V.

These drivers support paravirtual network cards and paravirtual hard disks, apart from many other paravirtual devices.

If a driver is not preloaded, it has to be installed explicitly. For example, VMware Tools for the VMware hypervisor is available for Windows and most UNIX variants.
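Inside a Linux guest, one way to see which paravirtual drivers are in use is to look at the loaded kernel modules. The sketch below parses text in the format of /proc/modules (module name in the first column). The module names are those used by the Linux kernel for virtio, VMware and Hyper-V devices; the function name and the descriptions in the table are illustrative.

```python
from pathlib import Path

# Linux kernel module names for common paravirtual network and disk drivers.
PARAVIRTUAL_DRIVERS = {
    "virtio_net": "KVM (virtio) network",
    "virtio_blk": "KVM (virtio) disk",
    "vmxnet3": "VMware network",
    "vmw_pvscsi": "VMware disk",
    "hv_netvsc": "Hyper-V network",
    "hv_storvsc": "Hyper-V disk",
}


def loaded_paravirtual_drivers(proc_modules_text):
    """Return the known paravirtual drivers found in /proc/modules text."""
    loaded = {
        line.split()[0]
        for line in proc_modules_text.splitlines()
        if line.strip()
    }
    return {
        module: description
        for module, description in PARAVIRTUAL_DRIVERS.items()
        if module in loaded
    }


if __name__ == "__main__":
    path = Path("/proc/modules")
    if path.exists():
        print(loaded_paravirtual_drivers(path.read_text()))
```

Drivers compiled directly into the kernel do not appear in /proc/modules, so an empty result does not necessarily mean that no paravirtual device is in use.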

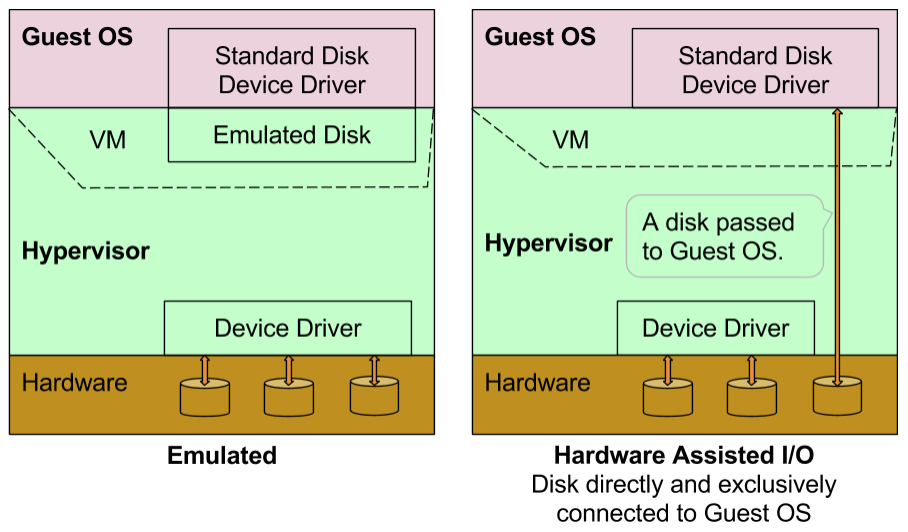

Hardware Support for I/O

Hardware level support for I/O makes performance much better and comparable to native. Two notable examples are:

- A device is connected to a Guest OS exclusively and directly.

For example, a network card or a hard disk is made available to a specific Guest OS. Intel has named this technology Intel VT-d, in which 'd' stands for direct. AMD-Vi is its equivalent from AMD.

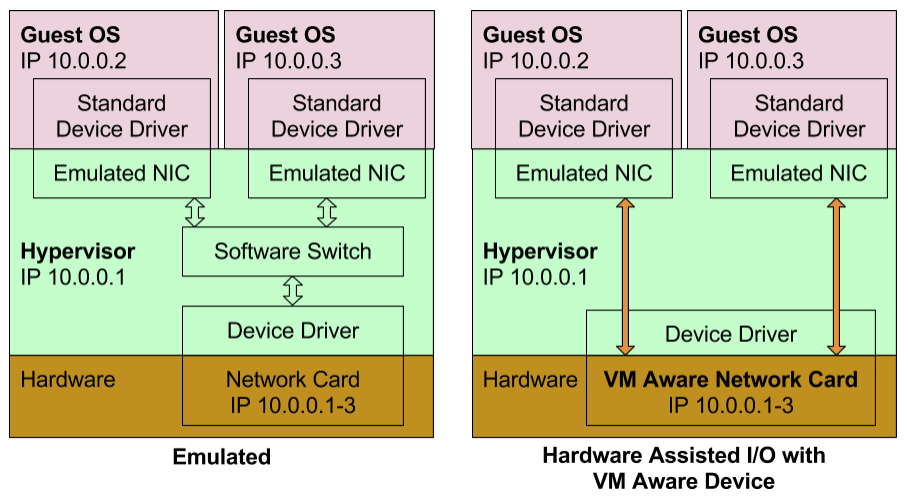

- Virtual machine aware device.

For example, a network card is virtual machine aware. It passes incoming packets to the appropriate Guest OS based on the address assigned to that Guest OS (typically the MAC address of its virtual network interface). Intel has named this technology Intel VT-c, in which 'c' stands for connectivity.

In both of the above technologies, the hypervisor has a negligible role or no role at all, except for setting up devices for VMs.

Exclusively and directly connected Devices

Motherboard and hypervisor must support this functionality. No special support is required from the device.
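On a Linux host, whether this support is active can be checked by looking for IOMMU groups, which the kernel exposes under /sys/kernel/iommu_groups when the IOMMU (Intel VT-d or AMD-Vi) is enabled. The following is a minimal sketch; the function name is hypothetical and the `root` parameter exists only so that the function can be tried against a different directory.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group to the device addresses it contains."""
    base = Path(root)
    if not base.is_dir():
        return {}  # no IOMMU support visible to the kernel
    groups = {}
    for group in base.iterdir():
        devices = group / "devices"
        if devices.is_dir():
            groups[group.name] = sorted(d.name for d in devices.iterdir())
    return groups


if __name__ == "__main__":
    found = iommu_groups()
    if not found:
        print("No IOMMU groups found; direct device assignment is unlikely to work.")
    for name, devices in sorted(found.items()):
        print(name, devices)
```

An empty result usually means that the feature is missing or switched off in firmware or in the kernel configuration.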

Virtual Machine aware Devices

Support is required in device, motherboard and hypervisor for this functionality to work.

Summary of different mechanisms of I/O Operations

| Mechanism | Characteristics |
|---|---|
| Emulated Device | Pure software solution. Very flexible but slow. |
| Paravirtual Device | Performs much better than an emulated device |
| Directly connected device to VM | Near native speed possible |
| VM Aware Device | Near native speed possible |

Section C

Native Hypervisors in Market

Native Hypervisors in Market

- Available Hypervisors

- Comparison

- Commercial Offerings

- Virtual Machines used in Public Clouds

Available Hypervisors

Four major native hypervisors are available. Other native virtualization solutions either use one of them as their core or are variants of them.

| Hypervisor | Remarks | Solutions based on it |
|---|---|---|
| VMware vSphere | Gold standard in native hypervisors. Good usability, rich features and a reliable solution, but pricey. | |
| Microsoft Hyper-V | Might become a strong contender, especially among the Microsoft user base. | |
| OSS Xen | Strong installed base but not much talked about anymore. KVM is likely to take over from Xen. | Citrix XenServer, Oracle VM |
| OSS KVM | Kernel based VM (KVM) is being promoted by the OSS community. Good future likely. | Red Hat Enterprise Virtualization |

Comparison

All four native hypervisors are very mature and stable for creating virtual machines (VMs). All of them are being used quite successfully.

However, differences do exist in areas such as I/O efficiency, performance isolation of the I/O of one VM from another, reservation of resources like CPU and memory for a VM, and usability. VMware vSphere generally performs better on all counts other than cost.

Differences also exist in non-core but useful features like VMware vMotion. vMotion allows the live migration of a VM from one machine to another. VMware vSphere is known as the gold standard in native hypervisors for the right reasons.

OSS Xen and OSS KVM are both mature, stable and usable. However, the OSS community is much more active in KVM than in Xen. Therefore, new users may prefer to start with KVM instead of Xen.

Commercial Offerings

Gartner's 'Magic Quadrant for x86 Server Virtualization Infrastructure' 2016 report for commercial-vendor-based virtualization offerings states:

| Vendor | Position |
|---|---|
| VMware | is the leader |
| Microsoft | is a strong competitor in the leader segment |
| Others like Oracle, Citrix, Red Hat | are niche players |

The Magic Quadrant does not rate community supported OSS like Xen and KVM, but they are likely to have significant deployment.

As per multiple other unofficial reports, the shares of commercial-vendor-based virtualization offerings among enterprise users in 2016 were:

| Vendor | Share |
|---|---|
| VMware | about 40-50% |
| Microsoft | about 25% |
| Others (Oracle, Citrix, Red Hat etc.) | the rest |

Virtual Machines used in Public Clouds

Machines provided by cloud computing are almost always virtual machines, except in a few rare cases. Hypervisors used in the three most popular public clouds are:

| Amazon AWS EC2 | |

| Microsoft Azure | |

| Google Cloud Platform | |

OpenStack cloud infrastructure:

Section D

Appendix: Recommended reading and References

Appendix: Recommended reading and References

- Recommended related topics

- References


Recommended related topics

Recommended related topics for further reading are:

Hypervisor specific additional features

Such as pause-resume of a virtual machine, and snapshot and cloning of a virtual machine.

What changes are required in the application when migrating the OS to a VM?

In theory, at most device drivers have to be added to the Guest OS, and no change is required in the applications unless an application accesses a device directly, for example by calling ioctl().

In addition, pause-resume of a VM, if used, can have an impact on the application too.

Resource Isolation

If one VM uses the network excessively, it impacts the network bandwidth of other VMs running on the same machine. Mechanisms exist to reserve resources.

Overcommitment of CPU

Topics of Further Reading

Recommended related topics for further reading are:

Multi-Machine management

It is very common for an organisation to have hundreds of machines. For such organisations, centralized management is very useful. In addition, features like live migration are useful too.

Are containers going to replace virtual machines?

An IBM Research Report has useful information about it.

References

A few useful links related to virtualization are given below:

Section E

Appendix: Additional Topics

Appendix: Additional Topics

- Pre 'VM aware CPUs' Era

- New Protection ring in CPU for Hypervisors

- Support of Nested pages in CPU for Hypervisors

Pre 'VM aware CPUs' Era

Prior to the availability of 'VM aware CPUs', two common techniques used to run a Guest OS were:

Guest OS binary translation by hypervisor

The hypervisor modifies the binary code of the Guest OS installed in the VM, then runs the Guest OS. For example, privileged instructions of the Guest OS are replaced by calls into the hypervisor so that the hypervisor can take control. This has become unnecessary with support from the CPU.

Guest OS is modified to run on hypervisor (Para-Virtualized Guest OS)

The Guest OS is modified to replace the parts of its code which depend on privileged instructions, such as memory management. Such operations go to the hypervisor instead.

The Guest OS becomes dependent on the hypervisor for which the modification was done, but this technique provides better performance than 'Guest OS binary translation'.

Amazon AWS EC2 gives the option of CPU-supported and Para-Virtual VMs. However, it is promoting 'CPU-supported VMs'.

This presentation is about hardware assisted hypervisors; therefore, details of the above two techniques have not been provided. They are rarely used anyway.

New Protection ring in CPU for Hypervisors

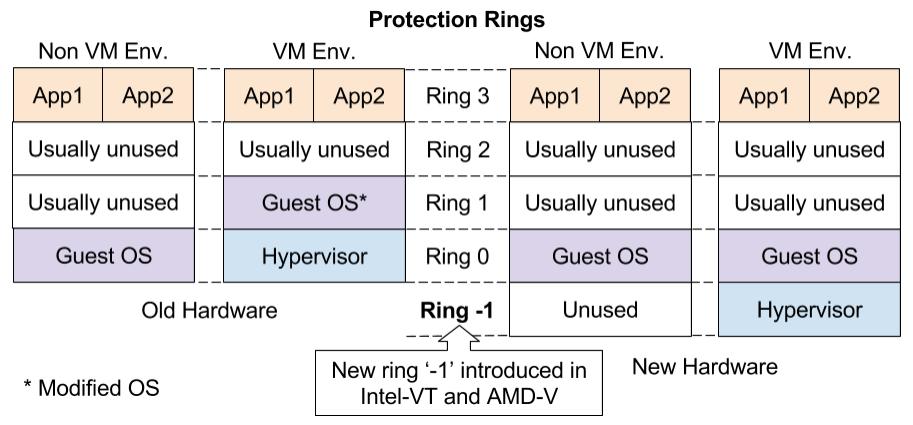

Traditionally, x86 CPUs had four protection rings (levels), from 0 (most privileged) to 3 (least privileged). Only ring '0' was capable of running privileged instructions.

To limit a Guest OS to its virtual environment, its privileged instructions were replaced so as to divert to the hypervisor, and the Guest OS was placed at ring '1'. Running the Guest OS at ring '1' ensured no interference with the hypervisor or other VMs.

One improvement was the introduction of a new privileged ring '-1' for the hypervisor in Intel VT-x and AMD-V CPUs. An unmodified Guest OS can now run at ring '0' in exactly the same manner as on raw hardware.

New Protection ring in CPU for Hypervisors

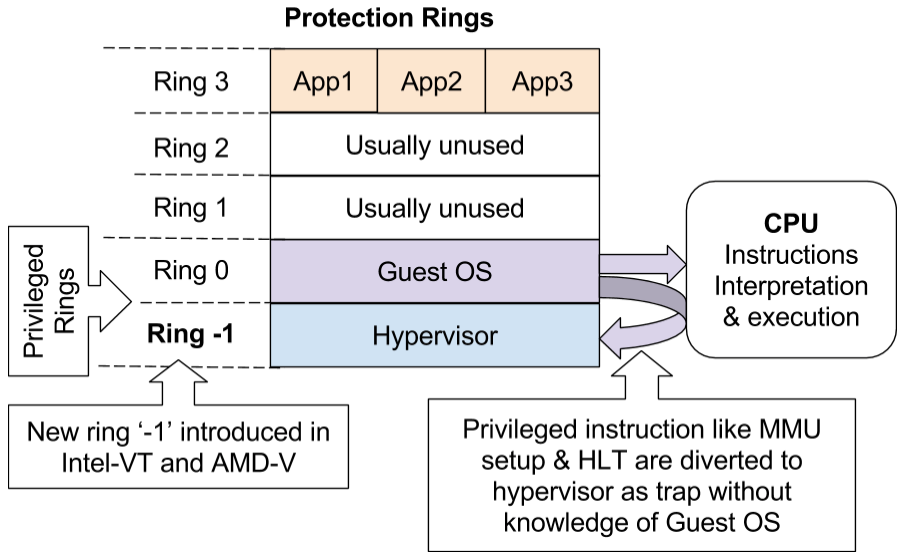

Rings '0' and '-1' are both capable of running privileged instructions, but with some differences so that the hypervisor has the upper hand.

Privileged instructions executed at ring '0' translate into a trap to the hypervisor running at ring '-1'. This way the hypervisor takes control to perform its job without the knowledge of the Guest OS. For example, privileged memory management instructions executed by the Guest OS are diverted to the hypervisor by the CPU.
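The trap mechanism described above can be pictured with a small toy model. Everything in it is hypothetical: the instruction names are placeholders, and the "CPU" is just a Python loop. It only shows the control flow, in which ordinary instructions run directly while privileged ones hand control to the hypervisor, which then resumes the guest.

```python
# Placeholder names standing in for privileged instructions.
PRIVILEGED = {"write_cr3", "hlt", "out"}


class ToyHypervisor:
    """Records every trap it receives from a guest (ring '-1' in the slides)."""

    def __init__(self):
        self.trap_log = []

    def handle_trap(self, vm_name, instruction):
        # A real hypervisor would emulate the effect for this VM only.
        self.trap_log.append((vm_name, instruction))


def run_guest(vm_name, instructions, hypervisor):
    """Run guest code; return how many instructions ran without a trap."""
    executed_directly = 0
    for instruction in instructions:
        if instruction in PRIVILEGED:
            hypervisor.handle_trap(vm_name, instruction)  # trap to hypervisor
        else:
            executed_directly += 1                        # full hardware speed
    return executed_directly


if __name__ == "__main__":
    hv = ToyHypervisor()
    direct = run_guest("vm1", ["add", "mov", "write_cr3", "add", "hlt"], hv)
    print(direct, hv.trap_log)
```

Because most instructions of a typical workload are not privileged, most of the guest code runs without involving the hypervisor, which is consistent with the low CPU overhead mentioned earlier.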

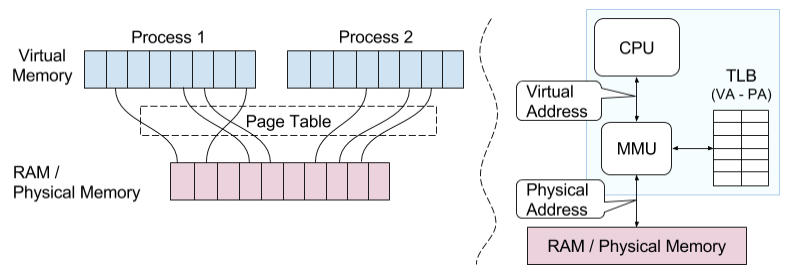

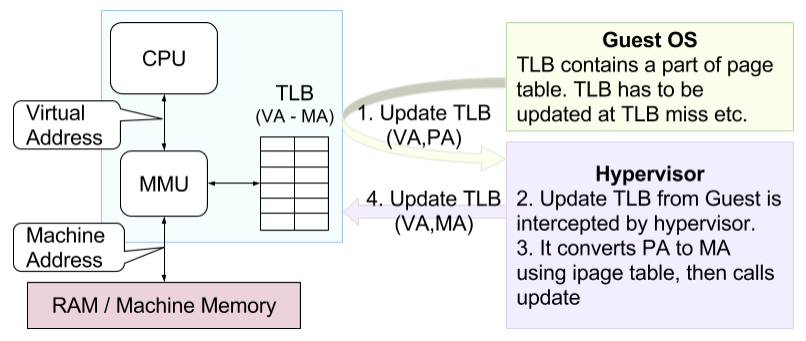

What is Page table and TLB ?

Applications on modern microprocessors run in a virtual memory space. A virtual memory address is translated into a physical memory address with the help of the page table.

The page table is managed by the OS, and support for translation is provided by the microprocessor's MMU (Memory Management Unit), which uses a TLB (Translation Lookaside Buffer) for faster translation. The TLB caches a part of the page table in the CPU and is regularly updated according to the access pattern of virtual memory addresses.

In hypervisors, Second Level Address Translation (SLAT) is required, as shown in the next slide.

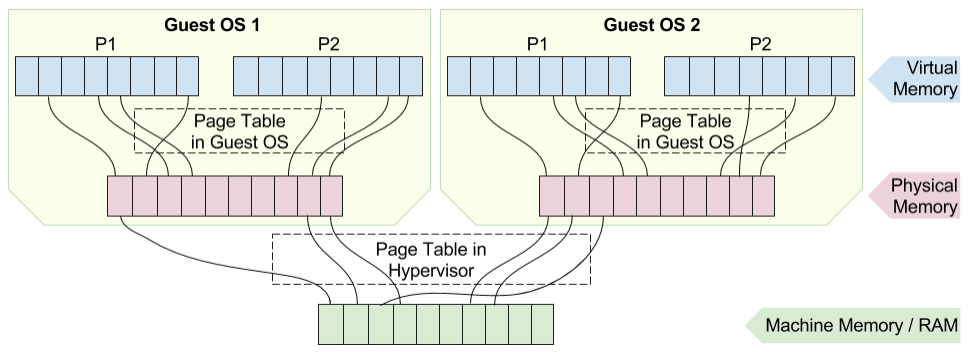

Support of Nested pages in CPU for Hypervisors

The physical address (PA) seen by the Guest OS is mapped to a machine (hardware) address (MA) by the hypervisor using a second page table.

Extended Page Tables (EPT) by Intel and Rapid Virtualization Indexing (RVI) by AMD support this address translation using just one MMU and TLB. The TLB holds mappings from virtual address (VA) directly to machine address (MA). Details are given in the next slide.

Support of Nested pages in CPU for Hypervisors

Maintaining just one TLB that maps from virtual address (VA) to machine address (MA) ensures that, on a TLB hit, translation performs the same as when the OS runs directly on raw hardware.

The page table of the Guest OS maps VA to PA, and the page table of the hypervisor maps PA to MA. With EPT or RVI, on a TLB miss the CPU has to consult both tables to obtain the MA, and it then stores the combined VA to MA mapping in the TLB. That is why a TLB update, which occurs at a TLB miss, is a costly operation in a VM environment.
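The two-stage lookup and the role of the TLB can be sketched as a toy model. It is a simplification under stated assumptions: each page table is a flat dictionary rather than a multi-level structure, the page size is fixed at 4096 bytes, and the class and attribute names are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size for this sketch


class NestedMmu:
    """Toy MMU: guest table maps VA to PA, nested table maps PA to MA."""

    def __init__(self, guest_page_table, nested_page_table):
        self.guest_page_table = guest_page_table    # VA page -> PA page (Guest OS)
        self.nested_page_table = nested_page_table  # PA page -> MA page (hypervisor)
        self.tlb = {}                               # VA page -> MA page
        self.table_lookups = 0                      # counts page table accesses

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page not in self.tlb:
            # TLB miss: both tables are consulted, then the result is cached.
            guest_physical_page = self.guest_page_table[page]
            machine_page = self.nested_page_table[guest_physical_page]
            self.table_lookups += 2
            self.tlb[page] = machine_page
        # TLB hit: a single lookup yields the machine page directly.
        return self.tlb[page] * PAGE_SIZE + offset


if __name__ == "__main__":
    mmu = NestedMmu({0: 5, 1: 7}, {5: 42, 7: 9})
    print(hex(mmu.translate(0x10)), mmu.table_lookups)
    print(hex(mmu.translate(0x20)), mmu.table_lookups)
```

The second translation in the usage example falls in the same page as the first, so it is served from the TLB and the lookup counter does not change. In real hardware the cost of a miss is higher than this model suggests, because every level of the guest page table is itself located through the nested table.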